Conductive Melody

Uniting music, clothing, and artistic performance, Conductive Melody produces music and light that are played by the wearer and shared with an audience. Conductive Melody is an exploration in uniting wearable art, artistic performance, music interaction design, and machine learning. It debuted at the Make Fashion 2019 Runway Show.

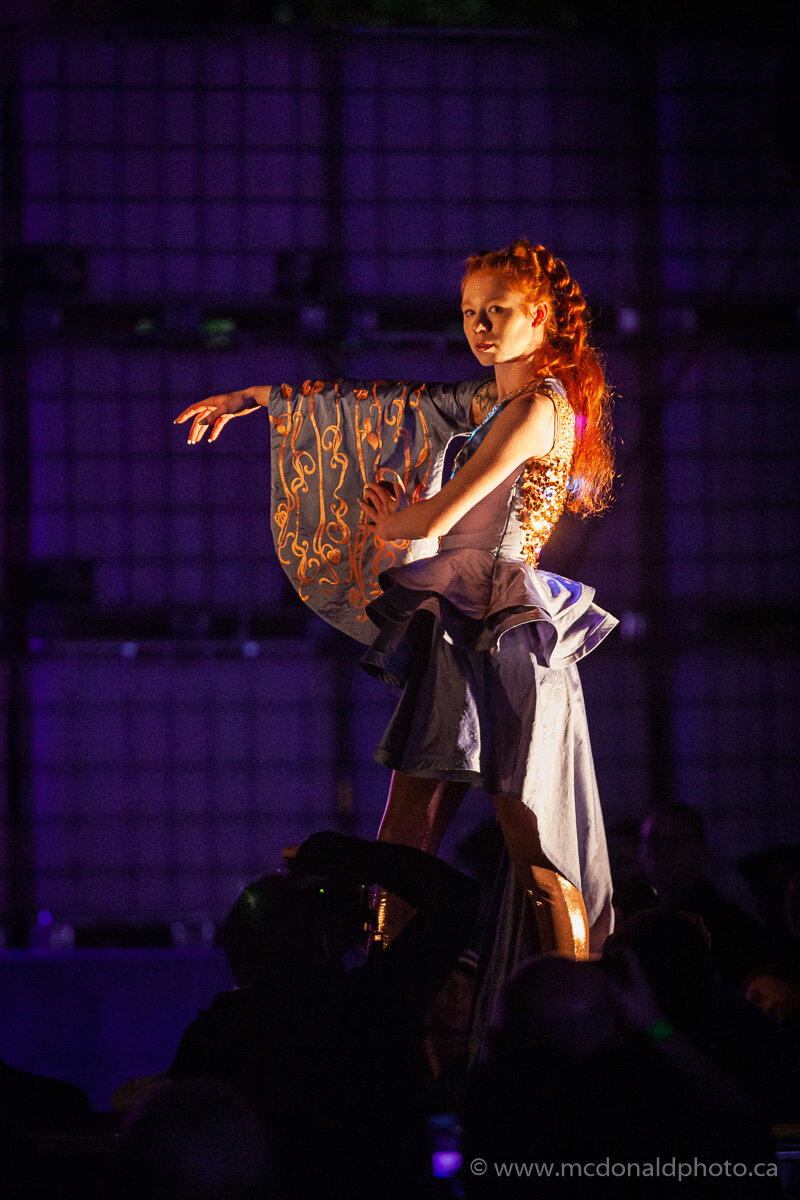

Conductive Melody at the Make Fashion 2019 runway show.

A Dress Made for Music Interaction

Conductive Melody uses a sleeve of laser-cut conductive fabric as a capacitive touch musical interface. The conductive fabric interface is inspired by the strings of a harp and was designed to be scrolling and organic. The music is made through machine learning, a form of AI in which a computer algorithm analyses and stores data over time and then uses this data to make decisions and predict future outcomes. An Arduino microcontroller captures the touch inputs, and a mini-computer, the Raspberry Pi, uses Piano Genie, a machine learning music interface, to expand the 8 capacitive touch inputs to a full 88-key piano in real time. The capacitive touch sensors also control the color and display of the lights on the front and back of the garment. The music can be played from the wearable speaker or through a computer or sound system.
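The touch-handling side of this pipeline can be illustrated with a minimal Python sketch. It is not the project's actual code. It assumes the Arduino reports the 8 pads as a single 8-bit mask (bit i set when pad i is touched), and the function names and the hue-based color mapping are hypothetical, chosen only for illustration. The Piano Genie step that turns a pad press into one of 88 piano keys is left out, since its interface is not described here.

```python
import colorsys
from typing import List, Tuple

NUM_PADS = 8  # the sleeve exposes 8 capacitive touch inputs


def decode_touch_mask(mask: int) -> List[int]:
    """Return the indices of touched pads in an 8-bit mask (assumed format: bit i = pad i)."""
    return [i for i in range(NUM_PADS) if mask & (1 << i)]


def new_presses(previous: int, current: int) -> List[int]:
    """Return pads touched in the current frame that were not touched in the previous one."""
    return decode_touch_mask(current & ~previous)


def pad_color(pad: int) -> Tuple[int, int, int]:
    """Hypothetical light mapping: spread the 8 pads evenly around the hue wheel."""
    r, g, b = colorsys.hsv_to_rgb(pad / NUM_PADS, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))


if __name__ == "__main__":
    previous, current = 0b00000001, 0b00000101  # pad 0 held, pad 2 newly touched
    for pad in new_presses(previous, current):
        # A real system would pass `pad` to the music model and the LEDs here.
        print(pad, pad_color(pad))
```

Detecting only newly touched pads, rather than every pad currently held, is one way to trigger a note once per touch instead of on every sensor reading.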

System Diagram for Conductive Melody